Securing Federated Learning

A comprehensive framework to enhance security, reliability, and privacy in Federated Learning systems.

HydraGuard

Hybrid defense against backdoor attacks

SECUNID

Filters out malicious updates in FL

CodeNexa

Prevents Man-in-the-Middle attacks

SHIELD

Protects VFL from label inference attacks

Security Framework for Federated Learning

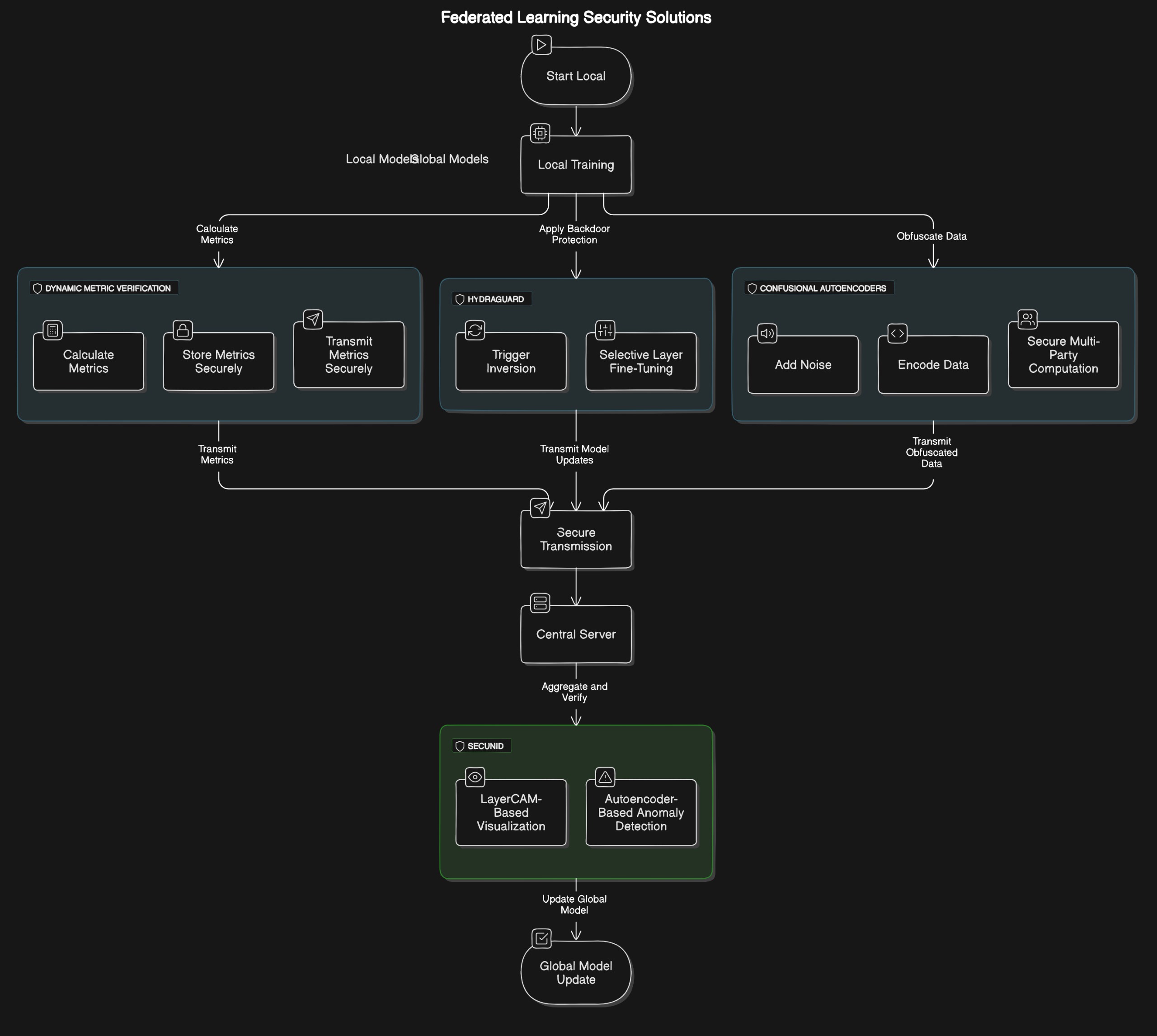

Federated Learning (FL) facilitates decentralized model training across various clients while safeguarding data privacy, but it poses significant security risks, such as backdoor attacks, poisoning attacks, Man-in-the-Middle (MITM) attacks, and label inference attacks. This research introduces a security framework with four core components, each tailored to mitigate specific threats in FL environments.

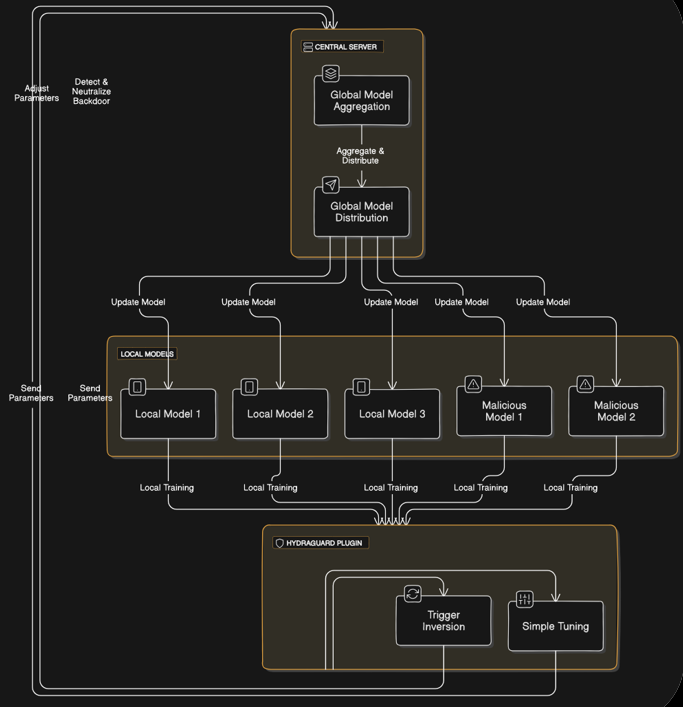

HydraGuard is a hybrid defense against backdoor attacks, combining Trigger Inversion and Simple Tuning methods. Trigger Inversion identifies potential backdoor triggers by reconstructing patterns from model gradients, while Simple Tuning adjusts specific layers of the model to neutralize these threats. This dual approach reduces backdoor attack success rates while maintaining model accuracy.
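The trigger-inversion idea can be illustrated with a small sketch. It assumes a Neural Cleanse-style formulation: a mask `m` and a pattern `p` are optimised so that stamped inputs flip to a target class, and an L1 penalty keeps the mask small. The model is a toy logistic regression whose gradients are written out by hand. In a Keras model, the same gradients would come from automatic differentiation. The name `invert_trigger` and all hyperparameters are hypothetical; this is not HydraGuard's actual implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def invert_trigger(w, b, X, lam=0.01, lr=0.5, steps=500):
    """Search for a small mask m and pattern p such that stamping clean inputs,
    x' = (1 - m) * x + m * p, pushes the toy model f(x) = sigmoid(w.x + b)
    towards target class 1. The L1 penalty lam * sum(m) keeps the mask small.
    (Hypothetical Neural Cleanse-style sketch, not HydraGuard's code.)"""
    d = X.shape[1]
    a = np.full(d, -3.0)  # mask logits: m = sigmoid(a) starts nearly empty
    q = np.zeros(d)       # pattern logits: p = sigmoid(q) starts at 0.5
    for _ in range(steps):
        m, p = sigmoid(a), sigmoid(q)
        X_adv = (1.0 - m) * X + m * p            # apply the candidate trigger
        z = X_adv @ w + b
        dz = (sigmoid(z) - 1.0) / len(X)         # d(mean BCE)/dz for label 1
        grad_m = (dz[:, None] * w * (p - X)).sum(axis=0) + lam
        grad_p = (dz[:, None] * w * m).sum(axis=0)
        a -= lr * grad_m * m * (1.0 - m)         # chain rule through sigmoid(a)
        q -= lr * grad_p * p * (1.0 - p)         # chain rule through sigmoid(q)
    return sigmoid(a), sigmoid(q)
```

Consider a toy backdoored model that places a large weight on one input feature. The recovered mask concentrates on that feature, which is the signal a defence would use to locate the trigger. A full defence would typically repeat the search for every candidate target class.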

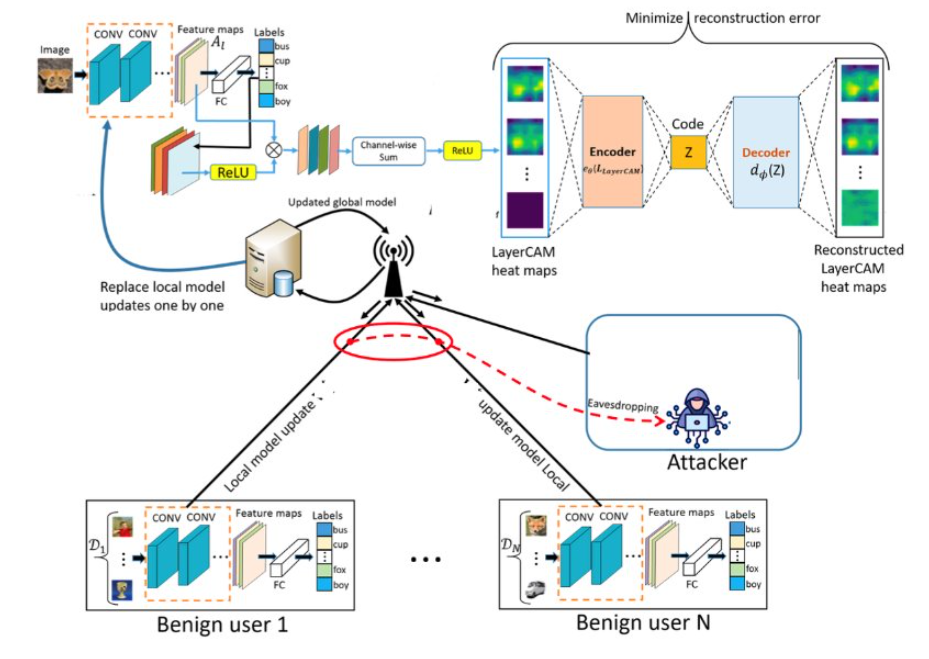

SECUNID addresses data and model poisoning attacks by filtering out malicious updates before they are integrated into the global model. It utilizes LayerCAM, a visual interpretability tool, and an Autoencoder to detect and exclude anomalies, ensuring only legitimate updates contribute to the model.
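A minimal sketch of the filtering step follows. It rests on several assumptions:

- Each client update has already been turned into a flattened LayerCAM heatmap, for example by running the updated model on a fixed probe input on the server.
- The autoencoder is fitted on heatmaps believed to be benign, for example from earlier rounds.
- A linear autoencoder stands in for whatever architecture SECUNID actually uses.

The optimal weights of a linear autoencoder span the top principal components, so here it is fitted in closed form with an SVD. `LinearAutoencoder`, `filter_updates` and the mean-plus-`k_sigma` threshold are hypothetical.

```python
import numpy as np


class LinearAutoencoder:
    """Linear autoencoder with k hidden units (hypothetical stand-in). Its optimal
    weights span the top-k principal components, so it is fitted with an SVD."""

    def __init__(self, k=2):
        self.k = k

    def fit(self, heatmaps):
        self.mean = heatmaps.mean(axis=0)
        _, _, vt = np.linalg.svd(heatmaps - self.mean, full_matrices=False)
        self.W = vt[: self.k].T                          # encoder; decoder is W.T
        return self

    def reconstruction_error(self, heatmaps):
        codes = (heatmaps - self.mean) @ self.W          # encode
        recon = codes @ self.W.T + self.mean             # decode
        return ((heatmaps - recon) ** 2).mean(axis=1)    # per-update MSE


def filter_updates(ae, heatmaps, reference_errors, k_sigma=3.0):
    """Accept an update only if its heatmap reconstructs about as well as the
    reference heatmaps did; returns a boolean mask (True = keep the update)."""
    threshold = reference_errors.mean() + k_sigma * reference_errors.std()
    return ae.reconstruction_error(heatmaps) <= threshold
```

Some heatmaps reconstruct poorly, such as one whose attention sits on an unusual region, as a trigger patch tends to produce. Those heatmaps exceed the threshold, and the corresponding updates are dropped before aggregation.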

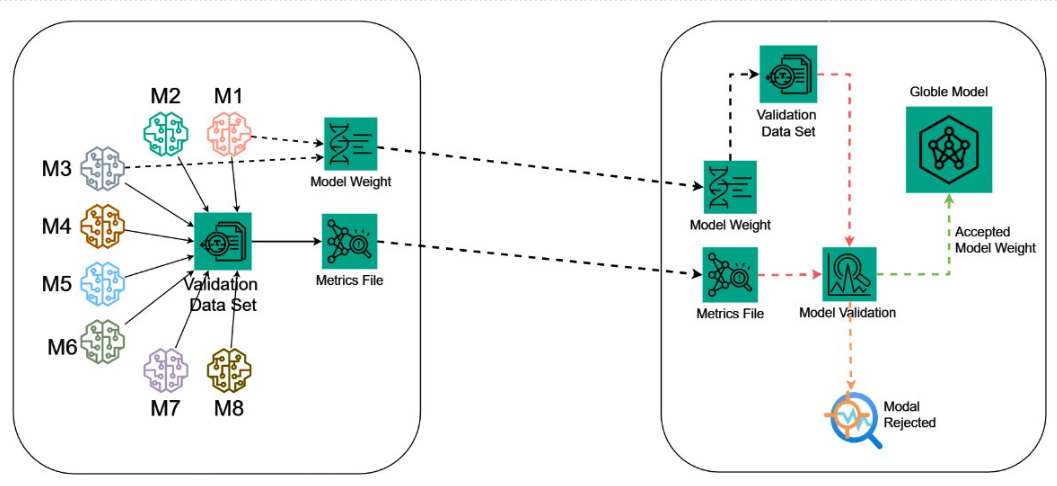

CodeNexa prevents MITM attacks by implementing dynamic metric verification, which encrypts and validates key performance metrics during FL, safeguarding the system from unauthorized alterations during communication.
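The verification half of dynamic metric verification can be sketched with Python's standard `hmac` and `hashlib` modules. This is an assumed mechanism, not CodeNexa's published protocol:

- The client tags its round number, its reported metrics and a SHA-256 digest of its model update with HMAC-SHA256 under a pre-shared key.
- The server rejects any message whose tag, round number or digest does not match.

HMAC provides integrity and authenticity only. The encryption (AES) mentioned for CodeNexa would be layered on top with a cryptography library and is not shown. `sign_metrics` and `verify_metrics` are hypothetical names.

```python
import hashlib
import hmac
import json


def sign_metrics(key, round_id, metrics, update_bytes):
    """Client side: bind the round number, metrics and update digest under one tag."""
    payload = {
        "round": round_id,
        "metrics": metrics,
        "update_sha256": hashlib.sha256(update_bytes).hexdigest(),
    }
    body = json.dumps(payload, sort_keys=True).encode()   # canonical serialisation
    tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    return {"payload": payload, "tag": tag}


def verify_metrics(key, message, expected_round, update_bytes):
    """Server side: reject altered, replayed or mismatched messages."""
    body = json.dumps(message["payload"], sort_keys=True).encode()
    expected_tag = hmac.new(key, body, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected_tag, message["tag"]):
        return False   # payload or tag was modified in transit
    if message["payload"]["round"] != expected_round:
        return False   # stale message from an earlier round being replayed
    digest = hashlib.sha256(update_bytes).hexdigest()
    return message["payload"]["update_sha256"] == digest
```

Binding the round number into the tagged payload makes the check "dynamic": a correctly signed message from an earlier round is still rejected.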

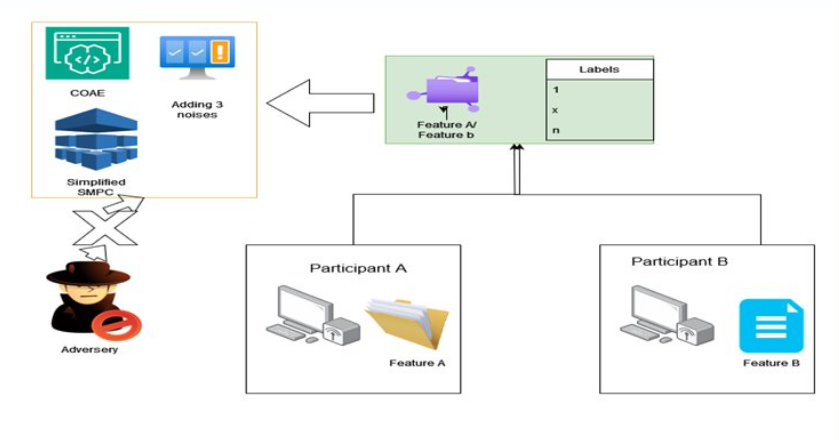

SHIELD protects Vertical Federated Learning (VFL) systems from label inference attacks using confusional autoencoders, noise addition, and a simplified Secure Multi-Party Computation (SMC) framework. These techniques obscure data representations, reducing the risk of sensitive label inference.
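The details of SHIELD's simplified SMC framework are not given here. The sketch below therefore illustrates additive secret sharing over a prime field, a standard SMC building block, as an assumed stand-in. Each party holds a random-looking share. Sums can be computed share by share, and only the combination of all shares reveals the value. Real-valued data is mapped to field elements with a fixed-point encoding. `PRIME`, `SCALE` and the helper names are hypothetical choices.

```python
import secrets

PRIME = 2**61 - 1   # field modulus (a Mersenne prime); hypothetical choice
SCALE = 2**16       # fixed-point scale for real-valued inputs; hypothetical


def encode(x):
    """Map a real number to a field element using fixed-point encoding."""
    return round(x * SCALE) % PRIME


def decode(v):
    """Inverse of encode; field elements above PRIME // 2 represent negatives."""
    if v > PRIME // 2:
        v -= PRIME
    return v / SCALE


def share(value, n_parties):
    """Split a field element into n additive shares that sum to it mod PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((value - sum(shares)) % PRIME)
    return shares


def reconstruct(shares):
    return sum(shares) % PRIME


def add_shared(a_shares, b_shares):
    """Each party adds its own two shares locally; no plaintext is revealed."""
    return [(a + b) % PRIME for a, b in zip(a_shares, b_shares)]
```

Because addition happens share by share, parties can aggregate protected values without any single party seeing another party's plaintext.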

Background

Federated Learning (FL) is a machine learning approach designed to address data privacy concerns. Introduced by Google in 2016, FL allows multiple clients to collaboratively train a shared global model without pooling their data in central storage, so sensitive data remains on the client side.
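Collaborative training of this kind is commonly implemented with Federated Averaging (FedAvg), the aggregation rule from Google's original FL work. Each client trains locally, and the server averages the returned parameters, weighted by local dataset size. The sketch assumes this rule, although the page does not say which one the framework uses. It represents each model as a flat NumPy parameter vector; `fedavg` is a hypothetical helper.

```python
import numpy as np


def fedavg(client_weights, client_sizes):
    """FedAvg aggregation: w = sum_k (n_k / n) * w_k, where n_k is client k's
    number of local training examples and n is the total across clients."""
    total = float(sum(client_sizes))
    return sum(w * (n / total) for w, n in zip(client_weights, client_sizes))
```

Only the parameter vectors reach the server. The training examples counted in `client_sizes` never leave each client.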

FL has found significant adoption in industries like healthcare and finance, where data privacy is crucial for compliance with regulations such as HIPAA.

Problem Statement

CodeNexa

Description

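The dynamic nature of Federated Learning (FL), in which model updates are exchanged continuously between clients and the server, calls for continuous verification of model integrity and protection against MITM attacks.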

HydraGuard

Description

Continuous attacks, in which malicious clients poison their updates over many training rounds, are more aggressive than single-shot attacks. Simply detecting and rejecting malicious weights leads to data loss and data breaches and reduces model accuracy. Existing defense mechanisms require substantial computational power and conflict with the core principles of FL. The result is unreliable predictions.

SECUNID

Description

Existing defenses, such as distance-based and statistical aggregation rules (e.g., Krum, Trimmed-Mean), struggle to detect sophisticated attacks in which malicious updates resemble legitimate ones. Attackers alter their local model updates during training and send the manipulated updates to the central server, which degrades the global model's accuracy and reliability. Current methods also usually rely on assumptions that rarely hold in practice: prior knowledge of the attacks, knowledge of the ratio of malicious participants, access to local datasets (which compromises participants' privacy), or IID data across clients.
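For context, coordinate-wise Trimmed-Mean can be sketched in a few lines. In each coordinate it discards the `beta` largest and `beta` smallest values. This defeats crude outliers, but it judges updates only by their values. An update crafted to sit inside the benign range is therefore never flagged, which is the weakness described above. The helper name and the value of `beta` are assumptions for illustration.

```python
import numpy as np


def trimmed_mean(updates, beta):
    """Coordinate-wise Trimmed-Mean: in every coordinate, drop the beta largest
    and beta smallest client values, then average the rest."""
    U = np.sort(np.stack(updates), axis=0)   # sort each coordinate independently
    if 2 * beta >= len(U):
        raise ValueError("beta too large for the number of updates")
    return U[beta: len(U) - beta].mean(axis=0)
```

Consider four benign updates near `[1, 1]` plus one blatant outlier `[50, -50]`. With `beta=1` the outlier is trimmed away, whereas a plain mean would be dragged to about `10.8` in the first coordinate.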

SHIELD

Description

Limited Defense Mechanisms: Current VFL security protocols are not robust enough to fully prevent label inference attacks, which can lead to data breaches, and there is a lack of scalable solutions that balance privacy and performance. Insufficient Mitigation Strategies: Existing solutions may not effectively address all types of label inference attacks, especially sophisticated passive and active forms. High Computational Cost: Existing defenses against label inference attacks in VFL, such as Secure Multi-Party Computation, are computationally expensive, and too little attention has been paid to lightweight privacy-preserving mechanisms for collaborative learning environments.

Proposed Solution

1. HydraGuard: mitigates backdoor attacks through trigger inversion and simple tuning, with minimal impact on model performance.
2. SECUNID: identifies poisoned model updates using LayerCAM heatmaps and Autoencoders for anomaly detection.
3. CodeNexa: uses dynamic metric verification and encryption to prevent MITM attacks during model communication.
4. SHIELD: defends Vertical Federated Learning (VFL) systems against label inference attacks through confusional autoencoders and noise injection.

Detect and Neutralize Backdoor Attacks

Implementing HydraGuard to identify and mitigate backdoor patterns through trigger inversion and simple tuning.

Mitigate Data and Model Poisoning

Using SECUNID with LayerCAM heatmaps and Autoencoders to filter out compromised model updates.

Secure Model Communication

CodeNexa ensures encrypted data exchange through dynamic metric verification and AES encryption to prevent MITM attacks.

Protect Against Label Inference in VFL

SHIELD utilizes confusional autoencoders and noise injection to secure sensitive data in collaborative environments.

Methodology

The proposed security framework comprises four sub-components, each designed to address specific security threats in Federated Learning.

HydraGuard

Sub Component

Detects backdoor triggers and fine-tunes model layers to remove their effect.
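One common reading of simple tuning, assumed here rather than taken from HydraGuard, works as follows. The final classification layer is re-initialised and only that layer is retrained on a small clean dataset, while the feature extractor stays frozen. The toy sketch uses precomputed features and a logistic output layer. Feature 2 plays the role of a backdoor trigger that never fires on clean data, so retraining from zero leaves its weight at exactly zero and the trigger loses its effect. `simple_tune_head` and its hyperparameters are hypothetical.

```python
import numpy as np


def simple_tune_head(features, labels, lr=0.5, epochs=300):
    """Re-initialise a logistic output layer to zero and retrain only that layer
    on clean data; `features` come from the frozen feature extractor."""
    w = np.zeros(features.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(features @ w + b)))
        err = p - labels                               # dBCE/dlogit per example
        w -= lr * features.T @ err / len(labels)       # full-batch gradient step
        b -= lr * err.mean()
    return w, b
```

Take a backdoored layer with weights `[4, 0, 20]` and bias `-2`. A triggered class-0 input `[-1, 0, 1]` scores `14` and is forced into class 1. After tuning, the trigger weight is zero and the same input is classified as class 0.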

SECUNID

Sub Component

Uses visual interpretability tools such as LayerCAM to generate heatmaps, which Autoencoders then analyze to identify anomalous updates.
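LayerCAM builds a class activation map by weighting each activation with the positive part of its own gradient, location by location. Grad-CAM, by contrast, uses one channel-wide average gradient per feature map. In symbols, the map is M = ReLU(sum over channels k of ReLU(dy/dA_k) * A_k). The NumPy sketch below assumes the activations and gradients have already been extracted from one convolutional layer; in Keras this is typically done with `tf.GradientTape`. The function name `layercam` and the max-normalisation are assumptions.

```python
import numpy as np


def layercam(activations, gradients):
    """LayerCAM heatmap for one convolutional layer.

    activations: feature maps A with shape (H, W, K)
    gradients:   d(class score)/dA with the same shape
    """
    weights = np.maximum(gradients, 0.0)                  # element-wise ReLU(grad)
    cam = np.maximum((weights * activations).sum(axis=-1), 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam                # scale to [0, 1]
```

Locations whose gradients are negative contribute nothing, so the heatmap highlights only the regions that push the class score up.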

CodeNexa

Sub Component

Encrypts model updates and validates metrics during transmission to prevent MITM attacks.

SHIELD

Sub Component

Secures VFL systems by obfuscating data using confusional autoencoders and injecting noise to prevent label inference.
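Noise injection can be illustrated on the gradient-sign leak in two-party VFL. With a sigmoid output and binary cross-entropy, the gradient the label holder sends back for each sample is `sigmoid(z) - y`. That gradient is negative exactly when `y = 1`, so a passive party can read labels off its sign. The sketch assumes Gaussian noise on those gradients as the defence, since SHIELD's exact noise mechanism is not described here. It measures how often the naive attack succeeds; all function names are hypothetical.

```python
import numpy as np


def logit_gradients(logits, labels):
    """Gradient of binary cross-entropy w.r.t. each logit: sigmoid(z) - y."""
    return 1.0 / (1.0 + np.exp(-logits)) - labels


def add_gradient_noise(grads, sigma, rng):
    """Label holder perturbs the gradients before sending them to the passive party."""
    return grads + rng.normal(0.0, sigma, size=grads.shape)


def sign_attack_accuracy(grads, labels):
    """Passive party guesses y = 1 whenever the received gradient is negative."""
    guesses = (grads < 0).astype(int)
    return float((guesses == labels).mean())
```

Without noise, the sign attack recovers every label. A larger `sigma` lowers the attack's accuracy, but it also degrades the gradients the passive party trains on, so the noise scale is a privacy-utility trade-off.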

Technologies Used

Python

React

TensorFlow

Keras

MongoDB

VS Code

Google Cloud

GitHub

Docker

Google Colab

Amazon AWS

OpenAI

Timeline in Brief

February 2024

Project Charter & Proposal

Project charter submission followed by proposal presentation and detailed report submission.

Progress: 100%

May 2024

Progress Presentation I

First progress review including presentation and status documentation.

Progress: 100%

June 2024

Research Paper

Submission of initial research paper documenting findings and methodology.

Progress: 100%

September 2024

Progress Presentation II

Second progress review with updated status documentation.

Progress: 100%

October 2024

Final Presentation & Website

Project website launch and final presentation with viva assessment.

Progress: 90%

November 2024

Research Documentation

Research paper registration and logbook submission.

Progress: 100%

December 2024

Final Report

Submission of proofread final report documenting complete project outcomes.

Progress: 100%

Downloads

Please find all documents related to this project below.

Our Team

B.L.H.D. Peiris

Team Leader

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

J.P.A.S. Pathmendre

Team Member

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

A.R.W.M.V. Hasaranga

Team Member

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

A.M.I.R.B. Athauda

Team Member

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

Mr. Kanishka Yapa

Supervisor | Lecturer

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

Mr. Samadhi Rathnayake

Co-Supervisor | Lecturer

University: Sri Lanka Institute of Information Technology

Department: Information Systems Engineering

Achievements

Our research paper "CodeNEXA: A Novel Security Framework for Federated Learning to Mitigate Man-in-the-Middle Attacks" was selected for the IEEE UEMCON Awards 2024 conference, and we have already presented it at the event.

Our research paper "Project ALVI: Privacy-Driven Defenses Federated Learning Security & Authentication" was selected for the ICAC 2024 conference, and we are now ready to present it there.

Our research paper "HydraGuard: Backdoor immunity in FL Environments" was selected for the SNAMS 2024 conference, and we are now ready to present it at the event.

Contact Us

Contact Details

For further queries, please contact us at project.alvi@gmail.com

We hope this project has been helpful to you. Thank you!

- Project ALVI Team 👾